Future Electronics: Sensor-Based Machine Learning

By Martin Schiel

EMEA Vertical Segment Manager (Embedded Computing), Future Electronics

The maturing of technologies such as the IoT, augmented reality (AR) and cloud computing has given rise to the smart factory. An increasingly familiar sight in the smart factory is the co-operative robot, or cobot. Cobots already play an important part in smart manufacturing, and are set to take on more functions and provide greater value in the factory over time.

As smart factories come to depend more on cobots for their basic functioning, it becomes more important that the cobots operate reliably, with no unplanned downtime. This is leading cobot manufacturers to build predictive maintenance into their products, giving users an early warning of emerging faults which could eventually impair the operation of the cobot. Users then have the opportunity to fix faults in planned maintenance time, without the disruption caused by an unexpected machine failure.

In a cobot, predictive maintenance systems rely on sensors which can detect small abnormalities in the motion of limbs and joints, and in the motors which drive them.

The application of machine learning technology, a branch of artificial intelligence (AI), enables the cobot to detect divergence in the patterns of vibration and sound from a reference point set when the cobot was new or in a known undamaged condition. Analysis of anomalous patterns can enable the system to diagnose incipient faults, and to trigger a request to the factory management system to plan repair and maintenance.
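As a rough illustration of what "divergence from a reference point" can mean, the sketch below compares the RMS vibration level of a new window of samples with a baseline recorded in a known-good state. It is not ST's algorithm: the function names, the tolerance and the sample values are all assumptions made for the example.

```python
import math

def rms(samples):
    """Root-mean-square amplitude of a window of vibration samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def diverges(baseline, window, tolerance=0.25):
    """Return True if the window's RMS differs from the baseline RMS
    by more than `tolerance` (a hypothetical relative threshold)."""
    ref = rms(baseline)
    return abs(rms(window) - ref) > tolerance * ref

# Made-up acceleration samples in g, for illustration only.
healthy = [0.10, -0.12, 0.11, -0.09, 0.10, -0.11]  # reference condition
worn = [0.30, -0.28, 0.33, -0.31, 0.29, -0.30]     # larger vibration amplitude

print(diverges(healthy, healthy))  # False
print(diverges(healthy, worn))     # True
```

A real system would track several features rather than a single RMS value, but the principle of comparing live measurements with a stored reference is the same.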

In early implementations of machine learning, the complex neural network algorithms which recognize patterns in sensor signals would typically run remotely, in an embedded computing system based on a powerful microprocessor.

Such a centralized system, however, has to process inputs from a large fleet of cobots. This places a heavy burden on the processing infrastructure, resulting in high power consumption, and occupies a large amount of the bandwidth in the network which connects the cobots to the central control system.

Now the emergence of a new generation of sensors with embedded AI capabilities offers cobot manufacturers a new way to implement local machine learning. And using tools and software provided by STMicroelectronics, a pioneer in the development of machine learning sensors, cobot design engineers can take advantage of a new, simpler way to build predictive maintenance capability into their products.

Broad range of MEMS sensors for vibration and ultrasound measurement

ST offers one of the industry’s largest portfolios of MEMS sensors, including accelerometers, IMUs, pressure sensors, and microphones. The sensing elements are manufactured using specialized micromachining processes, while the IC interfaces are developed with specialist CMOS technology. This enables the design of a dedicated circuit which is trimmed to match the characteristics of the sensing element.

This technology underpins the high performance of the IIS3DWB, for instance, a three-axis ultra wide-bandwidth MEMS accelerometer, an ideal device for detecting the vibrations generated by a faulty machine. ST also supplies motion sensor modules based on its MEMS sensor ICs: for instance, the ISM330DHCX is a system-in-package featuring a high-performance 3D digital accelerometer and 3D digital gyroscope tailored for Industry 4.0 applications.

Machine learning based on decision tree logic

The ISM330DHCX is one of the ST MEMS sensor products which include embedded AI capability in the form of a Machine Learning Core (MLC). This machine learning capability allows the system operator to shift some predictive maintenance algorithms from a central application processor to the sensor, where the dedicated MLC consumes much less power.

So how does a sensor’s small, low-power processing logic block provide the machine learning capability which normally calls for a large, power-hungry applications processor?

The answer is in the decision tree logic which ST embeds in its intelligent sensors: the decision tree algorithms which ST supports are simpler than conventional neural network algorithms, and so consume far fewer instruction cycles and far less power.

A decision tree is a mathematical tool composed of a series of configurable nodes. Each node represents an ‘if-then-else’ condition, for which an input signal, that is, the quantitative values calculated from the raw sensor data, is compared to a threshold value.

The ISM330DHCX can be configured to run up to eight decision trees simultaneously and independently. The decision trees are stored in the device and generate results in the dedicated output registers. The results of the decision tree can be read by a host microcontroller or application processor at any time. The sensor can also generate an interrupt for every change in the result produced by the decision tree.

How decision tree logic operates

The decision tree’s predictive model is built from a set of training data, and is stored in the ISM330DHCX. The training data are logged during the operation of the cobot in its desired state, that is, when in good condition, with no faults.

The decision tree is the method by which the MLC analyzes common features in raw sensor data. These common features will form the basis of the ‘model’ with which the sensor will compare the cobot’s operations over time. If the sensor outputs match the model with a high degree of certainty, then the cobot is fault-free. If the sensor cannot match its real-time measurements to the model, it indicates a potential fault, triggering an alert to the machine operator.

Each node of the decision tree contains a condition in which a feature is compared against a certain threshold. If the condition is true, the next node in the true path is evaluated. If the condition is false, the next node in the false path is evaluated, as shown in Figure 1. The status of the decision tree will evolve node by node until a result is found. The result of the decision tree defines a ‘class’ of behavior: in a fitness wristband, such a class might be ‘walking’ or ‘jogging’. In a cobot’s predictive maintenance application, the classes are defined to suit the workload of the cobot.

Fig. 1: A decision tree is composed of multiple nodes
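The node-by-node evaluation described above can be sketched in a few lines of Python. This is only a software model of the idea, not the way the MLC is implemented in silicon. The `Node` type, the feature names, the thresholds and the class labels are hypothetical.

```python
from dataclasses import dataclass
from typing import Union

@dataclass
class Node:
    feature: str                  # name of the feature to test
    threshold: float              # value the feature is compared against
    if_true: Union["Node", str]   # next node, or a class label (leaf)
    if_false: Union["Node", str]  # next node, or a class label (leaf)

def evaluate(tree, features):
    """Walk the tree node by node until a class label is reached."""
    node = tree
    while isinstance(node, Node):
        condition = features[node.feature] > node.threshold
        node = node.if_true if condition else node.if_false
    return node

# Hypothetical two-node tree with made-up features and thresholds.
tree = Node(
    "vibration_rms", 0.25,
    if_true="fault_suspected",
    if_false=Node("mean_z", 0.5, if_true="idle_upright", if_false="moving"),
)

print(evaluate(tree, {"vibration_rms": 0.10, "mean_z": 0.98}))  # idle_upright
```

Because each step is a single comparison, evaluating a tree costs only a handful of operations per window. This is why the approach suits a small logic block inside a sensor.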

The decision tree generates a new result for every time window, the length of which is set by the user so as to capture the characteristic features of the class of activity in question. The results can also be modified by an additional, and optional, filter called a meta-classifier which applies internal counters to the outputs from the decision tree.
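To show how counters can filter a stream of decision tree results, here is a simplified sketch. It assumes one counter per class, which counts up when the class is the current result and down otherwise, and an output which changes only when a counter reaches a user-defined end value. The class name, the end values and the input stream are hypothetical. The exact behavior of the device's meta-classifier is described in ST's documentation.

```python
class MetaClassifier:
    """Simplified counter-based filter over decision tree results."""

    def __init__(self, end_counter, initial=None):
        self.end_counter = end_counter          # class -> count needed to switch
        self.counters = {c: 0 for c in end_counter}
        self.output = initial

    def update(self, result):
        for c in self.counters:
            if c == result:
                # Count up, but never beyond the end value for this class.
                self.counters[c] = min(self.end_counter[c], self.counters[c] + 1)
            else:
                # Count down, but never below zero.
                self.counters[c] = max(0, self.counters[c] - 1)
        if self.counters[result] >= self.end_counter[result]:
            self.output = result
        return self.output

mc = MetaClassifier({"ok": 2, "fault": 3})
stream = ["ok", "ok", "fault", "ok", "fault", "fault", "fault", "fault"]
filtered = [mc.update(r) for r in stream]
print(filtered)
```

In this run the isolated 'fault' result in third position does not change the output. The output switches to 'fault' only after three 'fault' results in a row.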

The classes of activity identified by the MLC, in the form of filtered or non-filtered decision tree results, are accessible through registers of the ISM330DHCX module.

Getting started with the MLC

To design engineers who have implemented machine learning in a conventional centralized architecture, or who have never implemented machine learning before, the decision tree method for building machine learning models might appear unfamiliar or difficult. But in fact the operation of the MLC is simple and easy to learn, because of the excellent set of evaluation and development tools and resources provided by ST.

The foundation of the ST platform for evaluating the MLC on products such as the ISM330DHCX is the STWIN Wireless Industrial Node, part number STEVAL-STWINKT1B, shown in Figure 2. Aimed at machine learning and predictive maintenance applications, the STWIN is supported by three examples of decision tree models available on GitHub.

The method for using them is the same for all three. To help the design engineer to get started with the ISM330DHCX’s MLC, let us look in detail at the application of the decision tree for 6D position recognition. This can be used in a cobot or other robotics application to independently detect the position of a robot arm and compare it with the results from position sensors, a process typically required in robust safety mechanisms.

Fig. 2: The STWIN evaluation board from STMicroelectronics

The implementation of 6D position recognition on the STWIN platform proceeds in a series of steps.

Step 1: Introduction

A simple example of 6D position recognition is implemented using the sensor’s Mean feature, either signed or unsigned, on each axis of the accelerometer’s data outputs.

The software supplied by ST can recognize six positions, in which each of the accelerometer’s three axes points either up or down.

Step 2: Sensor configuration and orientation

The accelerometer is configured to operate on a ±2 g full scale, and at an output data rate of 26 Hz. Any sensor orientation is allowed for this algorithm.

Step 3: MLC configuration

The two features, mean signed and mean unsigned, are applied to all the axes of the accelerometer outputs. The MLC runs at 26 Hz, computing features on windows of 16 samples, equal to a duration of approximately 0.6 s (16 samples ÷ 26 Hz ≈ 0.615 s). A decision tree configured to detect the different classes of behavior consists of around eight nodes. A meta-classifier is not used in this ST application example.
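The two features and the window arithmetic can be modeled in software as follows. This is a sketch only: it assumes consecutive, non-overlapping windows, and the helper names and sample values are invented for the example.

```python
ODR_HZ = 26   # output data rate, from the configuration described above
WINDOW = 16   # samples per window, from the configuration described above

def window_features(samples):
    """Return (mean signed, mean unsigned) for one axis over one window."""
    mean_signed = sum(samples) / len(samples)
    mean_unsigned = sum(abs(s) for s in samples) / len(samples)
    return mean_signed, mean_unsigned

def windows(stream, size=WINDOW):
    """Yield consecutive, non-overlapping windows (an assumption)."""
    for i in range(0, len(stream) - size + 1, size):
        yield stream[i:i + size]

# Made-up Z-axis data in g: one window facing up, then one facing down.
z_axis = [1.0] * WINDOW + [-1.0] * WINDOW
features = [window_features(w) for w in windows(z_axis)]

print(round(WINDOW / ODR_HZ, 3))  # window duration in seconds: 0.615
print(features)                   # [(1.0, 1.0), (-1.0, 1.0)]
```

The signed mean tells the tree which way an axis points, while the unsigned mean tells it how strongly gravity acts on that axis, whichever way it points.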

To run the example on the STWIN board, the engineer needs to use ST HSDatalog software. HSDatalog is a high-speed command-line application for data logging. It also enables the user to configure the MLC of the ISM330DHCX, and to read the output from the selected algorithm.

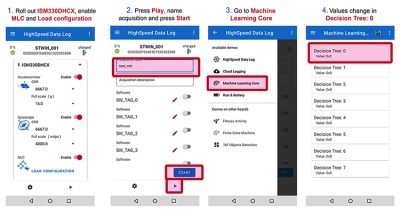

Figure 3 shows how to program the STWIN board in order to run the MLC example software with HSDatalog.

Fig. 3: How to program the STWIN board

Next, visit ST’s GitHub page for MLC examples and download the appropriate .ucf file. Load this configuration file to the ISM330DHCX device on the STWIN board, as shown in the ‘Load configuration’ step in Figure 4.

Fig. 4: Running the HSDatalog MLC software in ST’s BLE Sensor app
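For readers curious about what a .ucf file contains, the sketch below parses one into a list of register writes. It assumes that the file is plain text in which comment lines start with `--` and each configuration line has the form `Ac <address> <value>`, with both fields in hexadecimal. This layout is an assumption and should be checked against the file actually downloaded. The sample text is invented and is not a real configuration. In the STWIN flow the file is loaded by the tools, so a parser like this is not needed to run the example.

```python
def parse_ucf(text):
    """Return a list of (register_address, value) pairs from .ucf text.

    Assumes 'Ac <addr> <value>' lines with hex fields and '--' comments.
    """
    writes = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("--"):
            continue  # skip blank lines and comments
        parts = line.split()
        if len(parts) == 3 and parts[0] == "Ac":
            writes.append((int(parts[1], 16), int(parts[2], 16)))
    return writes

# Hypothetical excerpt, invented for illustration only.
sample = """-- hypothetical excerpt, not a real configuration
Ac 01 80
Ac 17 40
"""

for address, value in parse_ucf(sample):
    print(f"write 0x{value:02X} to register 0x{address:02X}")
```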

Now the system is ready to run according to the instructions shown in Figure 4. The output from the decision tree can be read from the register MLC0_SRC (70h). It is also displayed in the BLE Sensor app, as shown in Figure 4.

In the ST application example, the following values represent the various 6D positions:

0 = None

1 = X-axis pointing up

2 = X-axis pointing down

3 = Y-axis pointing up

4 = Y-axis pointing down

5 = Z-axis pointing up

6 = Z-axis pointing down
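On the host side, these values can be mapped to readable names. The sketch below assumes a hypothetical `read_reg` callable which takes a register address and returns one byte. How the register is actually reached over I2C or SPI is left to the sensor driver and is not shown here.

```python
from enum import IntEnum

class Position6D(IntEnum):
    """Values reported by the 6D position example."""
    NONE = 0
    X_UP = 1
    X_DOWN = 2
    Y_UP = 3
    Y_DOWN = 4
    Z_UP = 5
    Z_DOWN = 6

MLC0_SRC = 0x70  # register address given in the text

def read_position(read_reg):
    """Decode the decision tree result.

    `read_reg` is a hypothetical callable: register address -> byte value.
    """
    return Position6D(read_reg(MLC0_SRC))

# Stand-in for real hardware access, for illustration only.
fake_registers = {MLC0_SRC: 5}
print(read_position(fake_registers.__getitem__).name)  # Z_UP
```

A value outside the range 0 to 6 raises a `ValueError`, which makes an unexpected register content visible instead of silently mapping it to a position.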

The configured software generates a pulsed, active-high interrupt on the ISM330DHCX INT1 pin every time the register MLC0_SRC (70h) is updated with a new value.

Going further with ST’s MLC

ST’s 6D position application example offers an intuitive way to understand the operation of the MLC in the ISM330DHCX. Cobot designers will soon want to explore its functionality and capabilities further. They are supported in this by a wealth of information and resources available online from ST. These include the application note AN5392 about the ISM330DHCX MLC, and the Design Tip DT0139 for decision tree generation. Information about the high-speed data log function pack is available in the user manual UM2688.

Technical support is also available to Future Electronics customers from applications engineers located in branches worldwide.

Development board

Parts supported: ISM330DHCX, IIS3DWB, STM32L4R9ZIJ6, STSAFE-A110, IMP23ABSU

Board part number: STEVAL-STWINKT1B

Description:

The STWIN SensorTile wireless development kit is a reference design which simplifies the prototyping and testing of industrial IoT applications such as condition monitoring and predictive maintenance.

The kit consists of a core system board, a 480 mAh lithium-polymer battery, an STLINK-V3MINI debugger, and a plastic box. The core system board features a range of embedded industrial-grade sensors and an ultra low-power microcontroller. Backed by software packages, firmware libraries, and a cloud dashboard application, the STWIN SensorTile performs analysis of motion-sensing data across a wide range of vibration frequencies, including very high-frequency audio and ultrasound spectra, and implements local temperature and environmental monitoring.
